Introduction :-

The provided AWS CloudFormation template is a building block for a scalable and reliable data streaming architecture on Amazon Web Services (AWS). Collecting, processing, and storing data in real time is essential for many applications, from monitoring and analytics to data-driven decision-making. This template creates an end-to-end data streaming pipeline from two AWS services built for real-time data: an Amazon Kinesis data stream that ingests records, and a Kinesis Data Firehose delivery stream that delivers them to Amazon S3. Let's explore the components and features of this template.

Main Features of the Template:

Parameterization: The template starts by defining parameters that make it configurable: the environment name, the names of the Kinesis stream and the Firehose delivery stream, the data retention period, the number of shards for the Kinesis stream, and the name of an existing storage stack that exports the destination S3 bucket. Because the environment name is part of every resource name, the same template can be deployed once per environment (for example, dev and production) without edits.
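As a rough illustration of how these parameters turn into resource names, the Python sketch below mirrors the template's !Sub and !Join naming expressions and the AllowedPattern check on StorageStackName. CloudFormation performs this substitution itself at deploy time; the helper name resolve_names and the sample values (dev, telemetry, dev-storage) are hypothetical.

```python
import re

# Same expression as the AllowedPattern on the StorageStackName parameter.
STACK_NAME_PATTERN = re.compile(r"^[a-zA-Z][-a-zA-Z0-9]*$")


def resolve_names(environment: str, stream: str, firehose: str, storage_stack: str) -> dict:
    """Hypothetical helper: reproduce the names the template derives from its parameters."""
    # MinLength/MaxLength and AllowedPattern constraints on StorageStackName.
    if not (1 <= len(storage_stack) <= 255 and STACK_NAME_PATTERN.fullmatch(storage_stack)):
        raise ValueError(f"invalid StorageStackName: {storage_stack!r}")
    return {
        "kinesis_stream": f"{environment}-{stream}",            # !Sub ${EnvironmentName}-${RemoteMonitoringStreamName}
        "delivery_stream": f"{environment}-{firehose}",         # !Sub ${EnvironmentName}-${RemoteMonitoringFirehoseDeliveryStream}
        "iam_role": f"{environment}FirehoseDeliveryRole",       # !Join ['', [!Ref EnvironmentName, FirehoseDeliveryRole]]
        "bucket_export": f"{storage_stack}-firehose-delivery",  # Fn::ImportValue used for the destination bucket
    }


names = resolve_names("dev", "telemetry", "telemetry-to-s3", "dev-storage")
print(names["kinesis_stream"])  # dev-telemetry
```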

Kinesis Stream Creation: The core resource in this template is an Amazon Kinesis data stream. Kinesis Data Streams is a fully managed real-time streaming service, well suited to ingesting large volumes of streaming data. The template sets the stream name, the retention period (168 hours, or seven days, by default), the shard count, and server-side encryption with the AWS managed KMS key alias/aws/kinesis.
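Choosing ShardCount is mostly arithmetic. In provisioned mode each shard accepts up to 1 MB/s or 1,000 records/s of writes and serves up to 2 MB/s of reads shared by its standard consumers, so the sketch below takes the largest of those three ratios. It is a back-of-the-envelope estimate under those published limits only: it assumes evenly distributed partition keys and treats 1 MB as 1,024 KB, and the function name required_shards is hypothetical.

```python
import math

# Published per-shard limits for a Kinesis data stream in provisioned mode.
WRITE_MB_PER_SEC = 1.0
WRITE_RECORDS_PER_SEC = 1000
READ_MB_PER_SEC = 2.0


def required_shards(records_per_sec: float, avg_record_kb: float, read_fanout: int = 1) -> int:
    """Estimate ShardCount for a steady ingest rate.

    read_fanout is the number of shared-throughput consumers that read every record;
    the Firehose delivery stream in this template counts as one.
    """
    ingest_mb_per_sec = records_per_sec * avg_record_kb / 1024
    needed = max(
        ingest_mb_per_sec / WRITE_MB_PER_SEC,               # write bandwidth
        records_per_sec / WRITE_RECORDS_PER_SEC,            # write record rate
        ingest_mb_per_sec * read_fanout / READ_MB_PER_SEC,  # shared read bandwidth
    )
    return max(1, math.ceil(needed))  # a stream needs at least one shard


print(required_shards(500, 1))                  # 1
print(required_shards(3000, 2))                 # 6
print(required_shards(3000, 2, read_fanout=3))  # 9
```

With 2 KB records at 3,000 records/s, write bandwidth (about 5.9 MB/s) dominates, so the default ShardCount of 1 would throttle producers.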

Kinesis Firehose Setup: A Kinesis Data Firehose delivery stream delivers the data to an Amazon S3 bucket exported by the storage stack. Its configuration specifies the source (the Kinesis stream), buffering hints (deliver every 5 MB or every 300 seconds, whichever comes first), CloudWatch error logging, encryption, and separate S3 prefixes for delivered records and for failed records. Because Firehose reads from the stream and writes batched objects to S3 by itself, no consumer application is needed to land the data in storage.
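To see what the buffering hints and the time-based prefix mean for the objects that land in S3, here is a simplified Python model, not Firehose's implementation. It assumes a flush happens as soon as either 5 MiB is buffered or 300 seconds have passed since the oldest buffered record arrived, and it expands the template's year=/month=/day=/hour= prefix in UTC from the arrival time of the oldest record in each object, which is how the Firehose custom-prefix documentation describes the timestamp namespace. The class and function names and the stream name dev-telemetry are hypothetical. Firehose timestamp patterns follow Java's DateTimeFormatter, where lowercase yyyy is the calendar year and uppercase YYYY is the week-based year; Python's %Y below corresponds to yyyy.

```python
from datetime import datetime, timezone
from typing import List, Optional, Tuple

SIZE_LIMIT_BYTES = 5 * 1024 * 1024  # BufferingHints.SizeInMBs: 5
INTERVAL_SECONDS = 300              # BufferingHints.IntervalInSeconds: 300


def s3_prefix(stream_name: str, epoch_seconds: float) -> str:
    """Expand the template's year=/month=/day=/hour= Prefix for a timestamp, in UTC."""
    ts = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return f"{stream_name}/year={ts:%Y}/month={ts:%m}/day={ts:%d}/hour={ts:%H}/"


class DeliveryBuffer:
    """Toy model of Firehose buffering: flush on 5 MiB or 300 s, whichever comes first."""

    def __init__(self, stream_name: str) -> None:
        self.stream_name = stream_name
        self.pending_bytes = 0
        self.oldest_arrival: Optional[float] = None
        self.objects: List[Tuple[str, str]] = []  # (flush reason, S3 prefix) per simulated object

    def add(self, size_bytes: int, now: float) -> None:
        if self.oldest_arrival is None:
            self.oldest_arrival = now  # first record of a new batch starts the interval
        self.pending_bytes += size_bytes
        self.tick(now)

    def tick(self, now: float) -> None:
        if self.oldest_arrival is None:
            return  # nothing buffered, nothing to deliver
        if self.pending_bytes >= SIZE_LIMIT_BYTES:
            self._flush("size")
        elif now - self.oldest_arrival >= INTERVAL_SECONDS:
            self._flush("interval")

    def _flush(self, reason: str) -> None:
        # The prefix comes from the oldest record in the object, not the flush time.
        self.objects.append((reason, s3_prefix(self.stream_name, self.oldest_arrival)))
        self.pending_bytes = 0
        self.oldest_arrival = None


start = datetime(2024, 3, 1, 13, 58, tzinfo=timezone.utc).timestamp()

busy = DeliveryBuffer("dev-telemetry")  # 100 KiB/s fills 5 MiB after 52 records
for second in range(60):
    busy.add(100 * 1024, now=start + second)

quiet = DeliveryBuffer("dev-telemetry")  # 1 KiB, then nothing: the timer flushes it
quiet.add(1024, now=start)
quiet.tick(now=start + 300)

print(busy.objects)   # [('size', 'dev-telemetry/year=2024/month=03/day=01/hour=13/')]
print(quiet.objects)  # [('interval', 'dev-telemetry/year=2024/month=03/day=01/hour=13/')]
```

In this model the quiet batch is flushed at 14:03 UTC but still lands under hour=13, because the prefix uses the oldest record's arrival time.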

IAM Role and Policy: The template creates an IAM role that Kinesis Firehose assumes, plus a policy attached to that role. The policy grants the permissions Firehose needs to read from the Kinesis stream (DescribeStream, GetShardIterator, GetRecords, ListShards), write objects to the S3 bucket, and put log events into CloudWatch Logs.
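The direction of each grant is easier to see in the policy's JSON form. The Python sketch below assembles an equivalent policy document; the helper delivery_policy and the bucket name, account ID, and ARNs in the example are hypothetical. One detail it makes explicit: for S3, IAM evaluates bucket-level actions such as s3:ListBucket and s3:GetBucketLocation against the bucket ARN and object-level actions such as s3:PutObject against bucket/*, so a role that lists and writes the bucket needs both resources.

```python
import json
from typing import Any, Dict, List

# S3 actions Firehose needs, grouped by the resource type they apply to.
S3_BUCKET_ACTIONS = ["s3:GetBucketLocation", "s3:ListBucket", "s3:ListBucketMultipartUploads"]
S3_OBJECT_ACTIONS = ["s3:AbortMultipartUpload", "s3:GetObject", "s3:PutObject"]
KINESIS_READ_ACTIONS = [
    "kinesis:DescribeStream",
    "kinesis:GetShardIterator",
    "kinesis:GetRecords",
    "kinesis:ListShards",
]


def delivery_policy(bucket: str, stream_arn: str, log_group_arn: str) -> Dict[str, Any]:
    """Hypothetical helper: the three grants a Firehose delivery role needs."""
    statements: List[Dict[str, Any]] = [
        {  # Write side: list the bucket (bucket ARN) and write objects (bucket/*).
            "Effect": "Allow",
            "Action": S3_BUCKET_ACTIONS + S3_OBJECT_ACTIONS,
            "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
        },
        {  # Read side: poll the shards of the source stream.
            "Effect": "Allow",
            "Action": KINESIS_READ_ACTIONS,
            "Resource": stream_arn,
        },
        {  # Error logging: any log stream inside the delivery stream's log group.
            "Effect": "Allow",
            "Action": "logs:PutLogEvents",
            "Resource": f"{log_group_arn}:log-stream:*",
        },
    ]
    return {"Version": "2012-10-17", "Statement": statements}


policy = delivery_policy(
    bucket="example-telemetry-bucket",
    stream_arn="arn:aws:kinesis:us-east-1:111122223333:stream/dev-telemetry",
    log_group_arn="arn:aws:logs:us-east-1:111122223333:log-group:/aws/kinesisfirehose/dev-telemetry-to-s3",
)
print(json.dumps(policy, indent=2))
```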

Outputs for Reference: The template exports four values, the names and ARNs (Amazon Resource Names) of the Kinesis stream and of the Firehose delivery stream, under export names prefixed with the stack name, so other CloudFormation stacks can reference them with Fn::ImportValue.

Deployment Steps

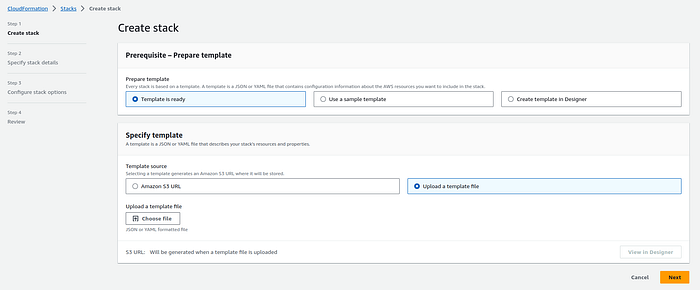

Follow these steps to upload and create the CloudFormation stack using the AWS Management Console:

1. Sign in to the AWS Management Console: Log in to your AWS account if you haven't already.

2. Navigate to CloudFormation: Open the AWS CloudFormation service from the AWS Management Console.

3. Click the “Create stack” button.

4. Upload the CloudFormation template file (YAML).

YAML:

```yaml
AWSTemplateFormatVersion: '2010-09-09'

Parameters:
  EnvironmentName:
    Description: Environment name for the application (for example, dev or production)
    Type: String
  RemoteMonitoringStreamName:
    Description: Name of the Kinesis data stream (prefixed with EnvironmentName)
    Type: String
  DataRetentionHours:
    Description: How many hours records stay available in the Kinesis stream (24 to 8760)
    Type: Number
    Default: 168
    MinValue: 24
    MaxValue: 8760
  ShardCount:
    Description: Number of shards for the stream
    Type: Number
    Default: 1
    MinValue: 1
  RemoteMonitoringFirehoseDeliveryStream:
    Description: Name of the Firehose delivery stream that delivers data to S3 (prefixed with EnvironmentName)
    Type: String
  StorageStackName:
    Description: Name of an active CloudFormation stack that exports the destination bucket name as <StorageStackName>-firehose-delivery
    Type: String
    MinLength: 1
    MaxLength: 255
    AllowedPattern: '^[a-zA-Z][-a-zA-Z0-9]*$'

Resources:
  RemoteMonitoringKinesisStream:
    Type: AWS::Kinesis::Stream
    Properties:
      Name: !Sub ${EnvironmentName}-${RemoteMonitoringStreamName}
      RetentionPeriodHours: !Ref DataRetentionHours
      ShardCount: !Ref ShardCount
      StreamEncryption:
        EncryptionType: KMS
        KeyId: alias/aws/kinesis

  # Outside the console, Firehose does not create the log group and log stream
  # named in CloudWatchLoggingOptions, so the template creates them.
  FirehoseLogGroup:
    Type: AWS::Logs::LogGroup
    Properties:
      LogGroupName: !Sub /aws/kinesisfirehose/${EnvironmentName}-${RemoteMonitoringFirehoseDeliveryStream}

  FirehoseLogStream:
    Type: AWS::Logs::LogStream
    Properties:
      LogGroupName: !Ref FirehoseLogGroup
      LogStreamName: S3Delivery

  RemoteMonitoringKinesisFirehoseS3DeliveryStream:
    Type: AWS::KinesisFirehose::DeliveryStream
    DependsOn:
      - FirehoseDeliveryPolicy
    Properties:
      DeliveryStreamName: !Sub ${EnvironmentName}-${RemoteMonitoringFirehoseDeliveryStream}
      DeliveryStreamType: KinesisStreamAsSource
      KinesisStreamSourceConfiguration:
        KinesisStreamARN: !GetAtt RemoteMonitoringKinesisStream.Arn
        RoleARN: !GetAtt FirehoseDeliveryRole.Arn
      ExtendedS3DestinationConfiguration:
        BucketARN: !Join
          - ''
          - - 'arn:aws:s3:::'
            - Fn::ImportValue: !Sub '${StorageStackName}-firehose-delivery'
        BufferingHints:
          SizeInMBs: 5
          IntervalInSeconds: 300
        CloudWatchLoggingOptions:
          Enabled: true
          LogGroupName: !Ref FirehoseLogGroup
          LogStreamName: !Ref FirehoseLogStream
        CompressionFormat: UNCOMPRESSED
        # The AWS managed key alias/aws/kinesis can only be used through Kinesis, so S3
        # cannot encrypt objects with it. Objects still get the bucket's default encryption
        # (SSE-S3 unless the bucket is configured otherwise). For SSE-KMS, set
        # KMSEncryptionConfig to a customer managed key ARN and grant this role
        # kms:GenerateDataKey and kms:Decrypt on that key.
        EncryptionConfiguration:
          NoEncryptionConfig: NoEncryption
        # Use yyyy (calendar year), not YYYY (week-based year), in timestamp patterns.
        Prefix: !Join
          - ''
          - - !Ref RemoteMonitoringKinesisStream
            - '/year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/hour=!{timestamp:HH}/'
        ErrorOutputPrefix: !Join
          - ''
          - - !Ref RemoteMonitoringKinesisStream
            - '/error/!{firehose:error-output-type}/year=!{timestamp:yyyy}/month=!{timestamp:MM}/day=!{timestamp:dd}/hour=!{timestamp:HH}/'
        RoleARN: !GetAtt FirehoseDeliveryRole.Arn
        ProcessingConfiguration:
          Enabled: false
        S3BackupMode: Disabled

  FirehoseDeliveryRole:
    Type: AWS::IAM::Role
    Properties:
      RoleName: !Join ['', [!Ref EnvironmentName, FirehoseDeliveryRole]]
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: firehose.amazonaws.com
            Action: 'sts:AssumeRole'

  FirehoseDeliveryPolicy:
    Type: AWS::IAM::Policy
    Properties:
      PolicyName: !Join ['', [!Ref EnvironmentName, FirehoseDeliveryPolicy]]
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          # Write to the destination bucket: bucket-level actions need the bucket ARN,
          # object-level actions need bucket/*.
          - Effect: Allow
            Action:
              - 's3:AbortMultipartUpload'
              - 's3:GetBucketLocation'
              - 's3:GetObject'
              - 's3:ListBucket'
              - 's3:ListBucketMultipartUploads'
              - 's3:PutObject'
            Resource:
              - !Join
                - ''
                - - 'arn:aws:s3:::'
                  - Fn::ImportValue: !Sub '${StorageStackName}-firehose-delivery'
              - !Join
                - ''
                - - 'arn:aws:s3:::'
                  - Fn::ImportValue: !Sub '${StorageStackName}-firehose-delivery'
                  - '/*'
          # Read from the source stream.
          - Effect: Allow
            Action:
              - 'kinesis:DescribeStream'
              - 'kinesis:GetShardIterator'
              - 'kinesis:GetRecords'
              - 'kinesis:ListShards'
            Resource: !GetAtt RemoteMonitoringKinesisStream.Arn
          # Write error logs to the delivery stream's log group.
          - Effect: Allow
            Action: 'logs:PutLogEvents'
            Resource:
              - !Sub 'arn:aws:logs:${AWS::Region}:${AWS::AccountId}:log-group:/aws/kinesisfirehose/${EnvironmentName}-${RemoteMonitoringFirehoseDeliveryStream}:log-stream:*'
      Roles:
        - !Ref FirehoseDeliveryRole

Outputs:
  RemoteMonitoringKinesisStream:
    Value: !Ref RemoteMonitoringKinesisStream
    Export:
      Name: !Sub ${AWS::StackName}-RemoteMonitoringKinesisStream
  RemoteMonitoringKinesisStreamArn:
    Value:
      Fn::GetAtt: [RemoteMonitoringKinesisStream, Arn]
    Export:
      Name: !Sub ${AWS::StackName}-RemoteMonitoringKinesisStreamArn
  RemoteMonitoringKinesisFirehoseS3DeliveryStream:
    Value: !Ref RemoteMonitoringKinesisFirehoseS3DeliveryStream
    Export:
      Name: !Sub ${AWS::StackName}-RemoteMonitoringKinesisFirehoseS3DeliveryStream
  RemoteMonitoringKinesisFirehoseS3DeliveryStreamArn:
    Value:
      Fn::GetAtt: [RemoteMonitoringKinesisFirehoseS3DeliveryStream, Arn]
    Export:
      Name: !Sub ${AWS::StackName}-RemoteMonitoringKinesisFirehoseS3DeliveryStreamArn
```

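5. Specify Stack Details:

Enter a stack name for your deployment.

Provide the parameter values. StorageStackName must be the name of an existing stack that exports the destination bucket name as <StorageStackName>-firehose-delivery; otherwise stack creation fails.

You can set additional stack options or tags if necessary.

6. Review and Create:

Review the stack details and configuration.

Because the template creates an IAM role with a custom name, select the acknowledgment that CloudFormation might create IAM resources with custom names (CAPABILITY_NAMED_IAM).

Click “Create stack” to initiate the deployment.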

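7. Monitor Stack Creation:

The CloudFormation stack creation process will begin.

Watch the Events tab in the AWS Management Console until the stack status is CREATE_COMPLETE. If creation fails, the stack rolls back by default, and the events list the resource that failed and why.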
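For repeatable deployments, the same console steps can be scripted with the AWS CLI commands aws cloudformation create-stack and aws cloudformation wait stack-create-complete. The Python sketch below only assembles and prints the argument lists; it calls the CLI through subprocess only when RUN is set to True. The stack name, template path, and parameter values are hypothetical, and StorageStackName must name a stack that already exports the bucket.

```python
import shlex
import subprocess
from typing import Dict, List

STACK_NAME = "dev-remote-monitoring"  # hypothetical stack name
TEMPLATE_PATH = "template.yaml"       # hypothetical local copy of the template


def create_stack_cmd(stack_name: str, template_path: str, params: Dict[str, str]) -> List[str]:
    """Argument list for `aws cloudformation create-stack` (console steps 3 to 6)."""
    cmd = [
        "aws", "cloudformation", "create-stack",
        "--stack-name", stack_name,
        "--template-body", f"file://{template_path}",
        # The template sets a custom RoleName, which requires this capability.
        "--capabilities", "CAPABILITY_NAMED_IAM",
        "--parameters",
    ]
    cmd += [f"ParameterKey={key},ParameterValue={value}" for key, value in params.items()]
    return cmd


def wait_cmd(stack_name: str) -> List[str]:
    """Argument list that polls until the stack reaches CREATE_COMPLETE (console step 7)."""
    return ["aws", "cloudformation", "wait", "stack-create-complete", "--stack-name", stack_name]


# Hypothetical values; DataRetentionHours and ShardCount keep their defaults.
params = {
    "EnvironmentName": "dev",
    "RemoteMonitoringStreamName": "telemetry",
    "RemoteMonitoringFirehoseDeliveryStream": "telemetry-to-s3",
    "StorageStackName": "dev-storage",  # must already export dev-storage-firehose-delivery
}
create = create_stack_cmd(STACK_NAME, TEMPLATE_PATH, params)
print(shlex.join(create))

RUN = False  # set to True only with the AWS CLI installed and credentials configured
if RUN:
    subprocess.run(create, check=True)
    subprocess.run(wait_cmd(STACK_NAME), check=True)
```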

Conclusion :-

In conclusion, the provided AWS CloudFormation template sets up a data streaming architecture on AWS for real-time data ingestion and delivery to Amazon S3. Its parameters let you size the stream (shard count and retention period) and deploy the same pipeline to several environments. Combining Amazon Kinesis Data Streams with Kinesis Data Firehose provides real-time ingestion plus managed delivery to storage without custom consumer code. Defining these resources in a template, rather than creating them by hand, makes the pipeline repeatable and saves time in every deployment. It is a practical starting point for businesses and organizations that want to use real-time data in their applications and decision-making on AWS.